Synthetic Audiences Rise as AI Makes Market Research Faster and More Predictive

Synthetic audiences powered by agentic AI are revolutionising market research. From static chatbots to simulations of a million AI personas with psychological depth, we explore how platforms are reporting up to 90% predictive accuracy whilst claiming research cost reductions of up to 300 times.

The Rise of Synthetic Audiences

Market research has long been the bedrock upon which companies build their strategies, test their products and pricing, and understand their customers. For decades, the industry relied on focus groups, surveys, A/B testing and panel studies - methods that, whilst thorough, demanded considerable time, expense and logistical coordination. The process of recruiting participants, scheduling sessions and analysing responses could stretch over months rather than weeks. Yet the landscape is shifting dramatically as artificial intelligence transforms how businesses gather consumer insights.

AI-powered market research has emerged as a compelling alternative to traditional methods. By leveraging machine learning algorithms to analyse vast datasets, predict consumer behaviour and automate insight generation, these AI systems deliver results with unprecedented speed. The technology can process millions of data points in hours, identifying patterns and trends that would take human analysts weeks to uncover. This computational approach has already revolutionised data analysis, analytics, reporting and visualisation across the industry.

Yet the most transformative development in this space is the rise of synthetic audiences - AI-generated personas that simulate real consumer groups and respond to questions as though they were actual people. These digital stand-ins represent specific demographic segments, complete with psychographic profiles, behavioural patterns and decision-making frameworks. The implications are profound: brands can now test marketing campaigns, validate pricing and messaging, or explore market reactions without recruiting a single human participant.

What Is a Synthetic Audience?

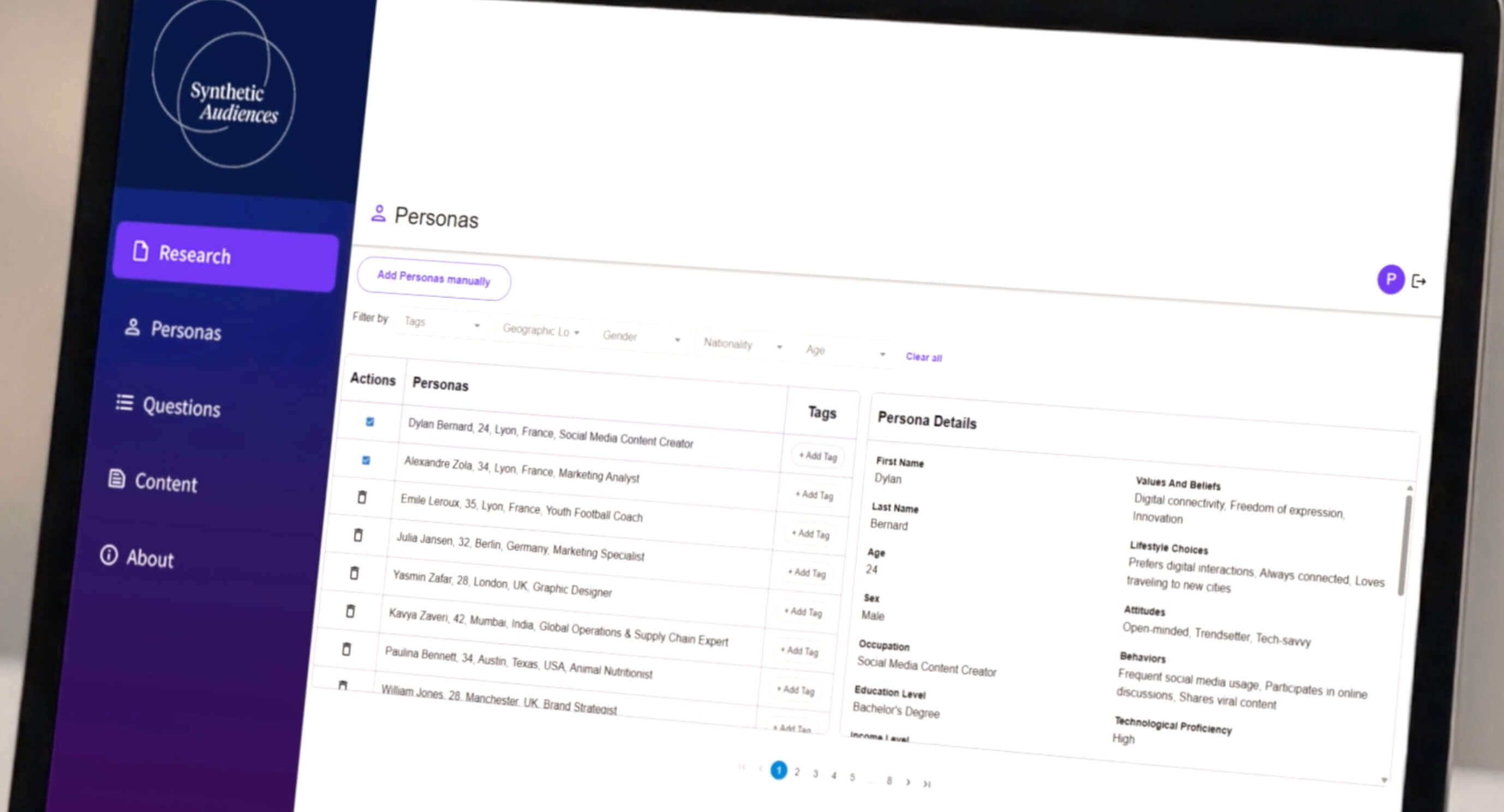

A synthetic audience comprises artificially generated consumer profiles trained on demographic, psychographic and behavioural data from real-world sources. These AI personas are designed to mimic how genuine individuals within specific segments might think and respond to various stimuli. Rather than statistical abstractions, they function as interactive digital entities capable of providing qualitative feedback, answering survey questions and even engaging in simulated conversations.

The technology draws upon large language models and machine learning frameworks to create personas with nuanced characteristics. Each synthetic individual might possess distinct personality traits, purchase histories, media consumption patterns and price sensitivities. When researchers pose questions to these audiences, the AI generates responses grounded in the data used to construct each persona, offering insights that mirror real-world consumer sentiment with surprising accuracy.

Recent validation studies have demonstrated the viability of this approach. A comparison conducted by EY revealed that synthetic audience responses aligned with traditional survey results at a 95% correlation, while Qualtrics research found that 87% of market researchers who employed synthetic responses reported high satisfaction with the outcomes. The data suggests these methods are not merely theoretical exercises but practical tools delivering meaningful results.
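To make the idea of "correlation with traditional survey results" concrete, here is a minimal sketch of how an alignment figure of this kind could be computed. The function name `pearson_r` and the sample scores are illustrative assumptions, not data from the EY or Qualtrics studies, whose actual methodology may well differ.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation between two equal-length lists of paired scores."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences with at least 2 items")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when one series is constant")
    return cov / math.sqrt(var_x * var_y)

# Hypothetical data: share of respondents agreeing with each of five questions.
human = [0.62, 0.48, 0.75, 0.30, 0.55]      # traditional survey panel
synthetic = [0.60, 0.50, 0.71, 0.35, 0.52]  # synthetic audience

r = pearson_r(synthetic, human)
print(f"Pearson r = {r:.2f}")
```

A value close to 1 means the synthetic audience ranks and spaces the questions much as the human panel does; it does not by itself show that individual answers match.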

From Chatbots to Digital Personas

The concept of synthetic audiences predates the current wave of advanced generative AI. Early platforms in this space relied on static, rule-based systems that generated personas through predefined parameters. These tools offered researchers the ability to create audience segments with specified demographics, but the personas themselves remained largely inflexible.

SyntheticUsers, for instance, provides a platform for running qualitative research interviews with AI-generated respondents. The system creates personality profiles and allows researchers to explore user needs, pain points and behaviours without recruiting actual participants. Similarly, Empathylab works with publishers and brands to create digital twins of their audiences, enabling teams to test content and concepts before launch.

Artificial Societies, which recently raised €4.5 million in combined pre-seed and seed funding, takes a different approach by simulating social dynamics within groups. The London-based company has run over 100,000 simulations since its public launch, with investors including Point72 Ventures and Y Combinator backing the platform. The funding round reflects growing investor confidence in synthetic audience technology as a viable market research category.

Evidenza, founded by former LinkedIn and Facebook executives, specialises in B2B synthetic research. The platform recently partnered with Dentsu to integrate synthetic audiences into media planning workflows, with early results showing a 0.87 correlation with traditional research methods. SocialTrait, another player in the space, claims its simulations match real-world results 86% of the time.

Whilst these platforms demonstrated the potential of synthetic research, they operated primarily through chatbot-style interactions or predefined role-based scenarios. The personas, though sophisticated, lacked the dynamic complexity and adaptability that would emerge with the next generation of AI architecture.

The Multi-Agent Revolution

The emergence of agentic AI - autonomous systems capable of goal-directed behaviour and multi-step reasoning - has fundamentally altered the synthetic audience landscape. Rather than simple chatbots responding to isolated queries, modern platforms employ networks of AI agents that simulate complex human psychology, social dynamics and decision-making processes.

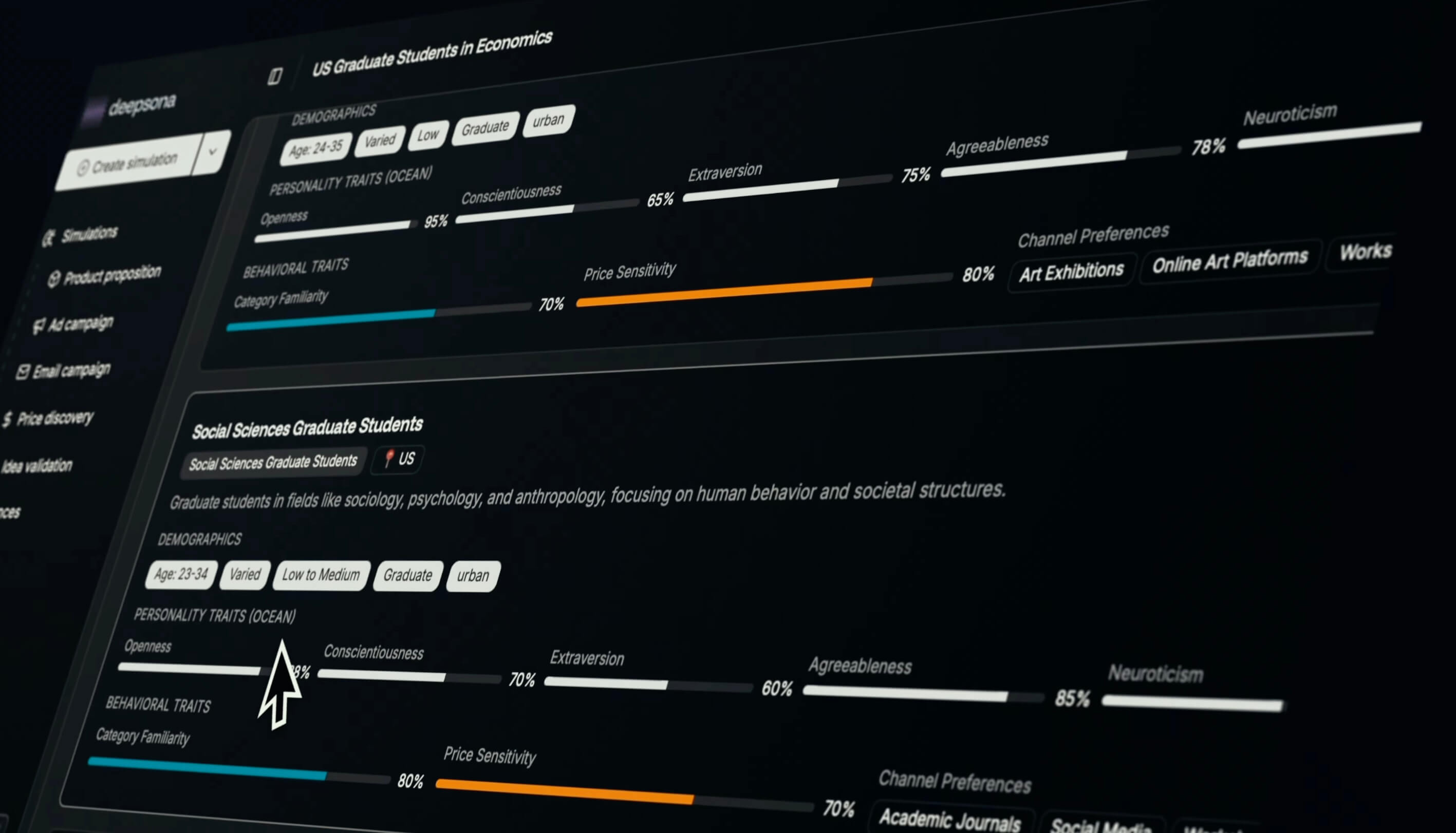

This shift has enabled synthetic audiences to exhibit far greater behavioural fidelity. For example, Deepsona, an AI market research platform for predictive consumer insights, builds personas using the Big Five (OCEAN) personality framework, layering psychographic profiles, socioeconomic factors, category familiarity and price sensitivity onto each digital individual. The platform’s Persona Factory Agent enforces complexity across multiple dimensions, creating synthetic audiences that respond not from simple pattern matching but from modelled psychological depth.
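As a rough illustration of what a layered persona might look like as a data structure, the sketch below combines the Big Five traits with the other dimensions named above. It is not Deepsona's implementation: the `Persona` class, the field names, the 0-to-1 scales, the income bands and the uniform random sampling are all assumptions made for the example. A production system would presumably sample from real-world distributions and pass each persona to a language model.

```python
import random
from dataclasses import dataclass

INCOME_BANDS = ("low", "middle", "high")  # hypothetical socioeconomic bands

@dataclass(frozen=True)
class Persona:
    # Big Five (OCEAN) traits, each on an assumed 0.0-1.0 scale
    openness: float
    conscientiousness: float
    extraversion: float
    agreeableness: float
    neuroticism: float
    # Additional layers mentioned in the text
    income_band: str             # socioeconomic factor
    category_familiarity: float  # 0 = novice, 1 = expert
    price_sensitivity: float     # 0 = insensitive, 1 = highly sensitive

def make_persona(rng):
    """Draw one persona; uniform sampling is a placeholder for real data."""
    return Persona(
        openness=rng.random(),
        conscientiousness=rng.random(),
        extraversion=rng.random(),
        agreeableness=rng.random(),
        neuroticism=rng.random(),
        income_band=rng.choice(INCOME_BANDS),
        category_familiarity=rng.random(),
        price_sensitivity=rng.random(),
    )

def make_audience(size, seed=0):
    """Build a reproducible list of personas from a seeded generator."""
    rng = random.Random(seed)
    return [make_persona(rng) for _ in range(size)]

audience = make_audience(1000, seed=42)
print(len(audience), audience[0].income_band)
```

Seeding the generator keeps an audience reproducible, so the same personas can be queried again when a concept is revised.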

The technical architecture behind these systems represents a significant advancement. Multi-agent frameworks allow synthetic personas to maintain context across extended interactions, simulate long-term behaviours and exhibit the sort of nuanced, sometimes contradictory responses that characterise actual human decision-making. Research from Google DeepMind and Stanford University demonstrated that AI agents could replicate human participants’ responses to established social science tests with 85% accuracy.

Deepsona reports that its synthetic audience simulations achieved 74-90% predictive alignment with real campaign outcomes and with actual human responses from YouGov and GWI. The platform’s multi-trait approach, which integrates personality dimensions with behavioural modelling, aligns with emerging research indicating that psychographic depth rather than demographic breadth drives predictive accuracy. The platform allows brands to build custom audiences of up to one million AI personas and to test creative concepts, pricing strategies and marketing campaign frameworks at scale. This represents a quantum leap from earlier systems limited to hundreds or thousands of personas.

The accuracy improvements stem from several factors. Agentic systems can draw upon richer training data, incorporating not just demographic information but behavioural patterns gleaned from transaction histories, social media interactions, and market research archives. They can model cognitive biases, emotional states and cultural contexts that influence purchasing decisions. Most significantly, they can adapt their responses based on the specific context of each query, rather than defaulting to generic patterns.

The Market Research Transformation

The data suggests a seismic shift in how the industry operates. According to Qualtrics’ 2025 Market Research Trends Report, 71% of market researchers believe that the majority of market research will be conducted using synthetic responses within three years. This projection reflects both the technology’s improving reliability and mounting pressure on research budgets.

Cost reduction stands as one of the most compelling advantages. Traditional focus groups might cost 20% more than synthetic alternatives, whilst some platforms claim cost reductions of up to 300 times per trial. The time savings prove equally dramatic - synthetic research delivers insights in hours or days, compared to the weeks or months required for traditional panel studies.

Speed and cost, however, tell only part of the story. Synthetic audiences eliminate several persistent problems that plague conventional research. Survey fatigue, social desirability bias and acquiescence bias all distort traditional research findings. Synthetic personas, by contrast, respond based on their modelled characteristics rather than performative self-presentation. Researchers can query these audiences repeatedly without concern for respondent burnout, enabling iterative testing that would prove impractical with human participants.

The technology also democratises access to hard-to-reach segments. Surveying senior executives, medical specialists or niche hobbyist communities typically requires extensive recruitment efforts and substantial incentive payments. Synthetic audiences representing these groups become instantly available, allowing companies to test messaging and positioning for audiences that would otherwise remain inaccessible.

Industry adoption is accelerating rapidly. Nearly 89% of researchers are already using AI-powered tools regularly or experimentally, whilst 83% reported that their organisations plan to significantly increase AI investment in 2025. Research teams that identified as “on the cutting edge of innovation” reported larger budgets and greater organisational influence, suggesting that synthetic audience adoption correlates with strategic value.

Use cases continue to expand. Brands employ synthetic audiences to test advertising creative, refine product positioning, validate pricing strategies and model crisis communications. Publishers use them to gauge reader reactions to editorial concepts. B2B companies leverage them to understand complex buying committee dynamics. The technology’s flexibility allows it to address research questions across virtually any category or industry.

Limitations remain, naturally. Synthetic audiences excel at replicating known patterns but struggle with genuinely novel scenarios or predicting future preference shifts. They cannot replicate sensory experiences - taste, touch, smell - that influence certain product categories. Cultural nuances and rapidly evolving social contexts can prove challenging to model accurately. Most practitioners recommend using synthetic research as a first-pass filter rather than a replacement for human validation, particularly for high-stakes decisions.
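The "first-pass filter" workflow can be sketched in a few lines: score every concept with the synthetic audience, then forward only a shortlist to human validation. The function name `first_pass_filter`, the concept names and the scores are hypothetical, and the ranking rule is deliberately simplistic.

```python
def first_pass_filter(synthetic_scores, shortlist_size):
    """Return the names of the top-scoring concepts for human validation."""
    if shortlist_size < 0:
        raise ValueError("shortlist_size must be non-negative")
    ranked = sorted(synthetic_scores.items(), key=lambda kv: kv[1], reverse=True)
    return [name for name, _ in ranked[:shortlist_size]]

# Hypothetical synthetic-audience appeal scores for four concepts.
scores = {
    "concept_a": 0.71,
    "concept_b": 0.42,
    "concept_c": 0.66,
    "concept_d": 0.18,
}

shortlist = first_pass_filter(scores, shortlist_size=2)
print(shortlist)  # ['concept_a', 'concept_c']
```

Only the shortlisted concepts would then go to a human panel, which keeps people in the loop for the decisions that matter most.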

The regulatory environment is also evolving. Privacy regulations such as GDPR make traditional panel research increasingly complex, as companies must navigate consent frameworks and data protection requirements. Synthetic audiences, which generate artificial data rather than collecting personal information, sidestep many of these concerns. This compliance advantage is likely to accelerate adoption, particularly for research involving sensitive topics or vulnerable populations.

Looking ahead, the trajectory seems clear. Market researchers expect that within three years, AI will predict market trends more accurately than human analysts, whilst maintaining the explanatory power that executives require. The technology will not eliminate traditional research entirely - human insights remain indispensable for exploratory studies, ethnographic observation, and understanding genuinely novel phenomena. Yet for the vast middle ground of market testing, concept validation, and iterative optimisation, synthetic audiences are becoming the default approach.

The transformation represents more than mere technological substitution. It fundamentally alters the economics of evidence-based decision-making, making rigorous market research accessible to organisations that previously could not afford it. Smaller brands can now test with the sophistication once reserved for multinational corporations. Product teams can validate dozens of concepts before committing development resources. Marketing departments can optimise campaigns with the same iterative discipline that software engineers apply to code.

The Future of Evidence-Based Decision Making

The synthetic audience revolution is not coming; it has arrived. The question facing market researchers is no longer whether to adopt these tools but how to integrate them effectively into their methodologies, maintaining the rigour and insight quality that stakeholders demand whilst embracing the speed and scale that competitive markets require. Those who master this balance will define the industry’s next chapter.
